Generative artificial intelligence, also known as generative AI or GenAI, is disrupting all aspects of our lives at an unprecedented pace and is poised to push the boundaries of how we interact with technology and approach content creation. According to a study of sixty-three use cases by consulting firm McKinsey & Company, GenAI will significantly influence all industries and add up to $4.4 trillion annually to the global economy.

This guide offers an in-depth look at generative AI technology, explaining how it works, showcasing the pioneers behind the technology, and exploring generative AI models and core tools along with their respective use cases.

Generative AI is an innovative technology designed to easily generate content, including images, videos, text, audio, software code, 3D models, and more, hence the term ‘generative’. Unlike predictive machine learning (ML) models that identify data patterns and make forecasts based on these patterns, generative AI models are trained on large amounts of input data to learn not only intricate patterns but also relationships between data types and use this information to create new outputs. GenAI models can reflect the properties of the input data in response to prompts and turn text inputs into an image, an image into a song, and more.

By allowing for the creation of highly realistic and sophisticated content, GenAI opens up infinite opportunities for numerous businesses across various sectors. Harnessing the power of generative AI not only drives innovation, heightens creativity, and increases productivity but also dramatically improves customer experiences, streamlines business operations, and helps extract valuable insights, paramount for enhancing decision-making processes.

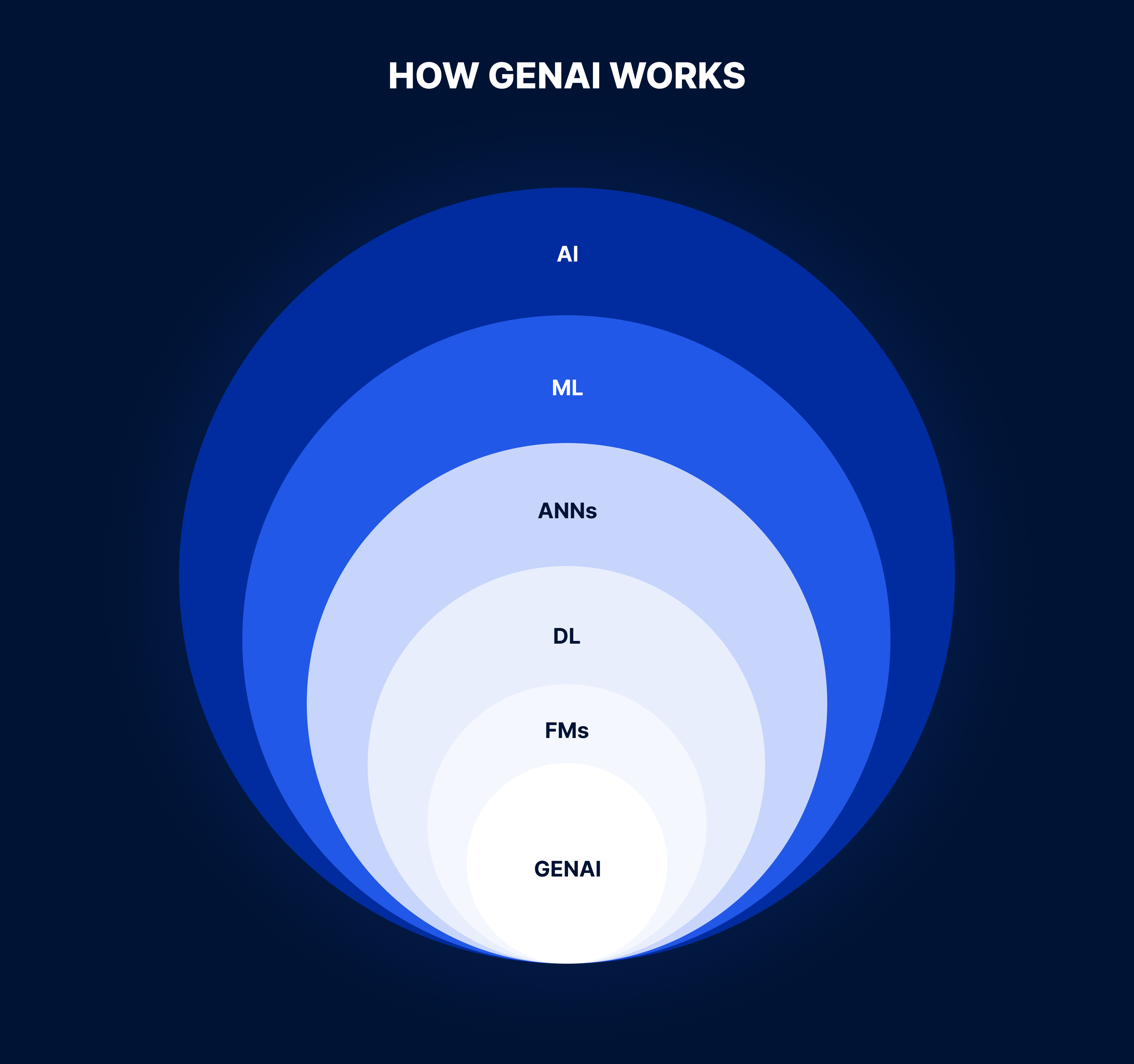

Generative AI is rooted in ML, artificial neural networks (ANNs), and deep learning (DL), all of which are subsets of AI. ML algorithms enable machines to learn from data, while ANNs are computational models that imitate the way nerve cells work in the human brain. ANNs can be arranged into many different architectures, one of the most influential being the “transformer.”

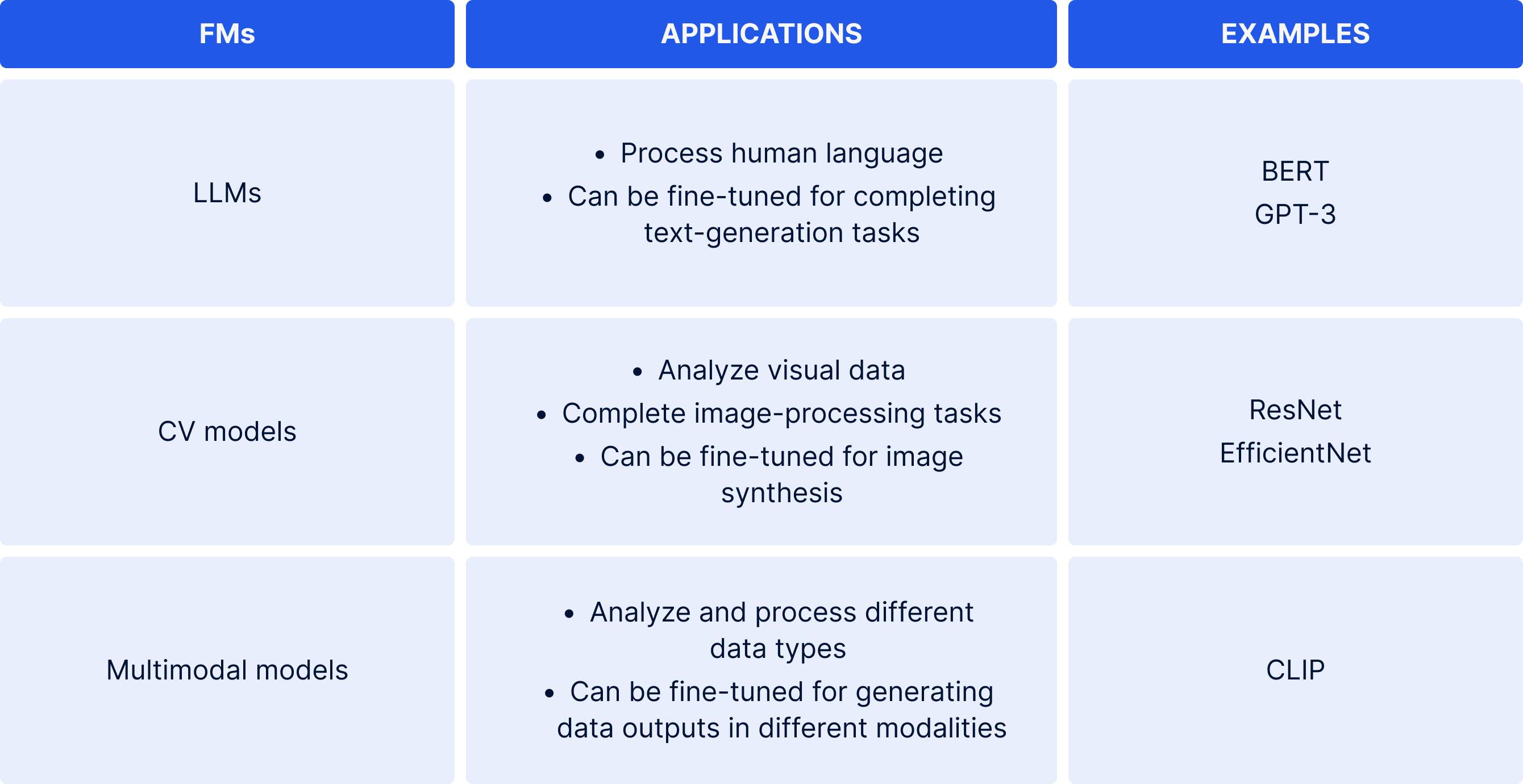

DL uses ANNs to process large amounts of unstructured data, identify underlying structures and patterns in this data, and learn from them. It’s commonly used in fields like computer vision, natural language processing (NLP), speech recognition, facial recognition, and more. DL algorithms, combined with an unsupervised learning approach, underpin the development of foundation models (FMs). Part of the DL realm, FMs are large-scale neural networks trained on large amounts of unlabeled data, serving as a basis for multiple tasks. Therefore, rather than training a new AI model each time it’s necessary to perform a specific task, a pre-trained FM can be utilized and fine-tuned for a particular application—be it language translation, image recognition, or content generation. FM classifications vary widely but can be broadly categorized into:

FMs offer truly limitless opportunities. Most importantly, AI foundation models lay the groundwork for building more advanced generative models, which empower generative AI technology and handle specific content-generation tasks.

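Designed to produce original content, generative AI models typically fall into the following categories: denoising diffusion probabilistic models (DDPMs), generative adversarial networks (GANs), variational autoencoders (VAEs), and transformer-based models.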

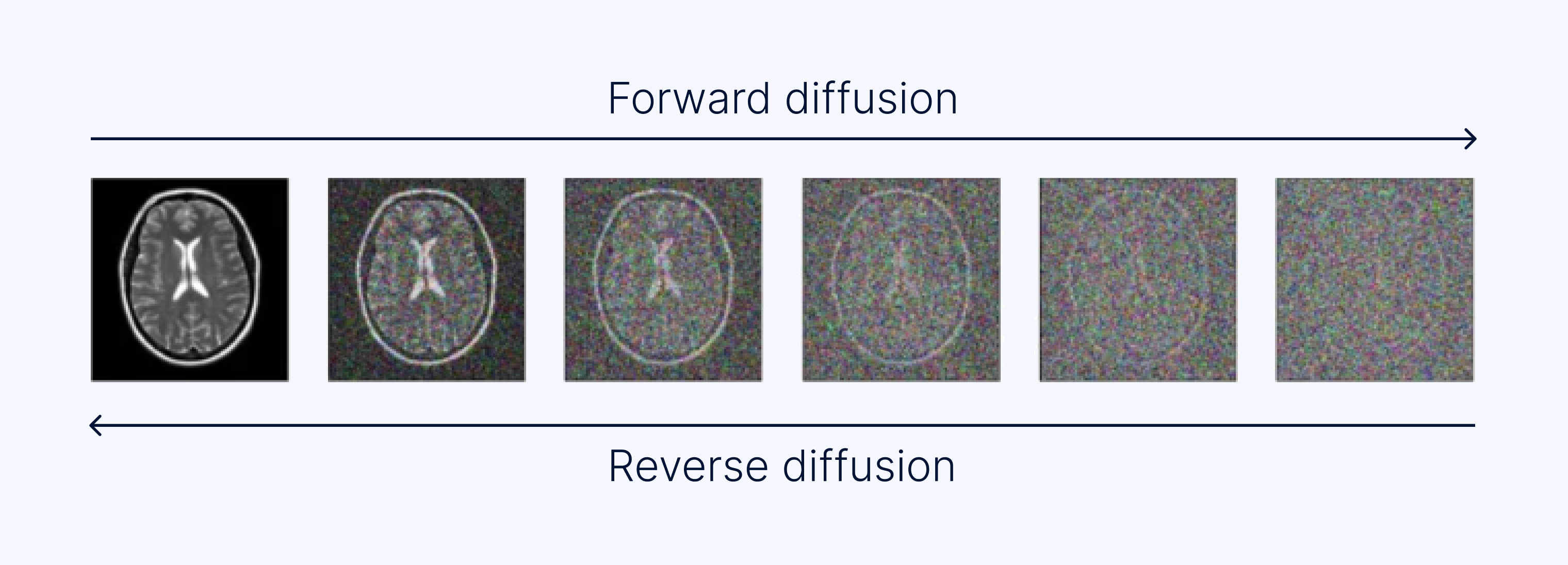

Denoising diffusion probabilistic models (DDPMs), otherwise known as diffusion models, learn to generate new data through a two-step training process: forward diffusion and reverse diffusion. In essence, forward diffusion applies a sequence of controlled, subtle changes in the form of random noise to the training data. After many iterations, the noise destroys the training data, and the model learns the reverse diffusion process, which gradually removes the noise to reconstruct the original data. Once trained, the model runs the reverse process on fresh random noise to generate novel data outputs. Diffusion models can assist with various tasks, such as inpainting, focused on filling in damaged or missing parts of an image; outpainting, or continuing an image beyond its original borders; and generating high-quality, coherent images. Other applications for diffusion models include 3D modeling, medical diagnosis, and anomaly detection.
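The forward diffusion step can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the function names, the four-value "data point", and the noise schedule (1,000 steps rising linearly from 0.0001 to 0.02) are assumptions chosen for the example. The reverse process, which requires a trained neural network, is not shown.

```python
import math
import random


def forward_diffusion(x0, betas, rng):
    """Return every intermediate version of x0 as noise is added step by step."""
    trajectory = [list(x0)]
    x = list(x0)
    for beta in betas:
        # Each step slightly shrinks the signal and mixes in Gaussian noise.
        x = [
            math.sqrt(1.0 - beta) * value + math.sqrt(beta) * rng.gauss(0.0, 1.0)
            for value in x
        ]
        trajectory.append(x)
    return trajectory


def signal_fraction(betas):
    """Fraction of the original signal amplitude that survives all steps."""
    return math.sqrt(math.prod(1.0 - beta for beta in betas))


# Assumed schedule: noise level grows linearly over 1,000 steps.
betas = [0.0001 + (0.02 - 0.0001) * t / 999 for t in range(1000)]
rng = random.Random(0)            # fixed seed so the run is repeatable
x0 = [1.0, -1.0, 0.5, 0.0]        # stand-in for one training example

steps = forward_diffusion(x0, betas, rng)
print("versions stored:", len(steps))
print("signal left after the last step:", signal_fraction(betas))
```

With this schedule, less than 1% of the original signal amplitude remains after the final step, which is what "the noise destroys the training data" means in practice.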

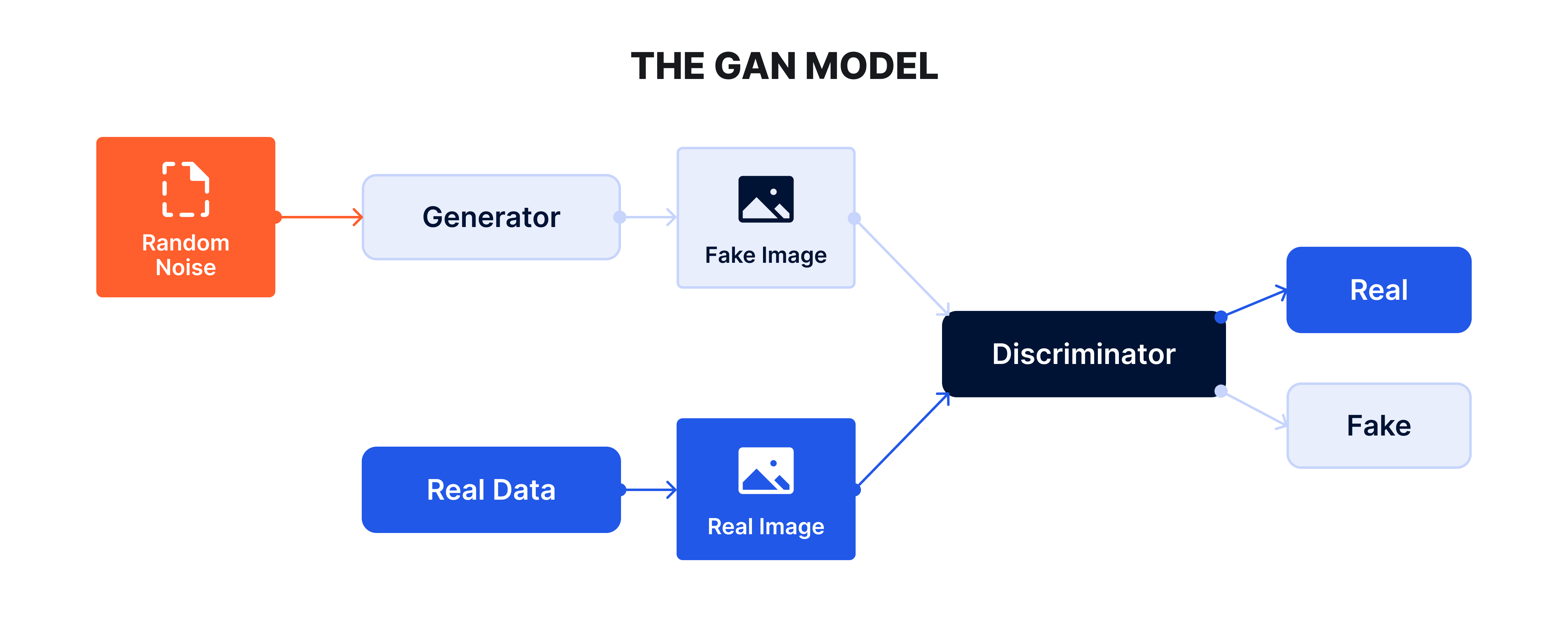

The generative adversarial network (GAN) model entails training two submodels: a generator and a discriminator. Taking random noise as input, the generator produces fake data samples, while the discriminator endeavors to differentiate between real data and the fake data created by the generator. This adversarial training continues until the generator is capable of producing authentic-looking data that the discriminator can no longer distinguish as fake. GANs are powerful tools for a wide variety of GenAI tasks, spanning from generating human faces, images, and cartoon characters to image-to-image and text-to-image translation. One prominent example of GAN usage is image-generation tools capable of producing highly realistic images from text inputs.
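The tug-of-war between the two submodels comes down to two loss values, sketched below. This assumes the commonly used cross-entropy formulation; the function names and the sample probabilities are illustrative, and the neural networks that would produce those probabilities are omitted.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Low when the discriminator scores real data near 1 and fake data near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Low when the discriminator is fooled into scoring fake data near 1."""
    return -math.log(d_fake)


# d_real / d_fake: the discriminator's estimated probability that a sample is real.
early = {"d_real": 0.9, "d_fake": 0.1}    # fakes are easy to spot
late = {"d_real": 0.5, "d_fake": 0.5}     # discriminator can only guess

for name, p in (("early", early), ("late", late)):
    print(
        name,
        "discriminator:", round(discriminator_loss(p["d_real"], p["d_fake"]), 3),
        "generator:", round(generator_loss(p["d_fake"]), 3),
    )
```

As training progresses toward the point where the discriminator can only guess, the generator's loss falls and the discriminator's loss rises, which mirrors the stopping condition described above.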

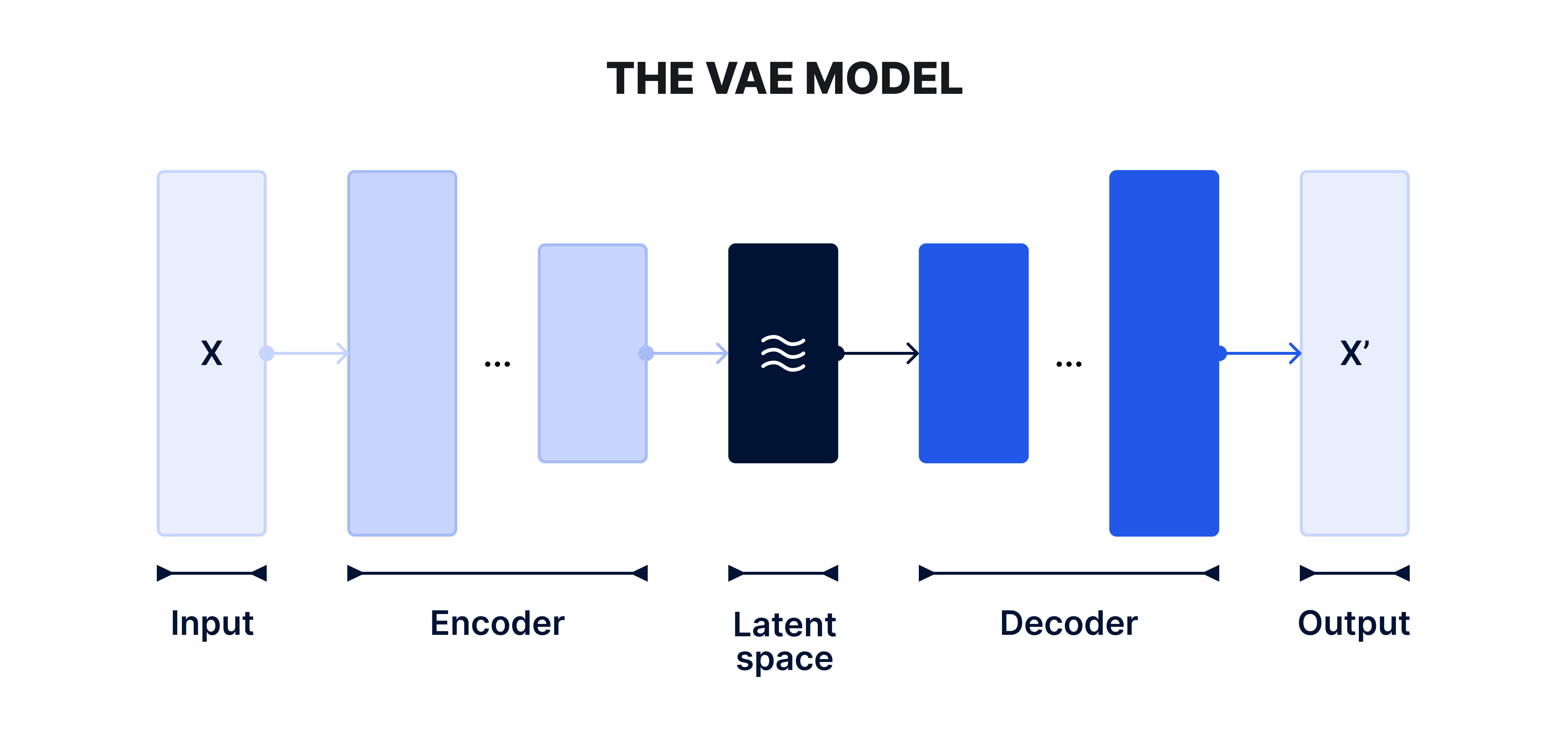

The variational autoencoder (VAE) model leverages encoder and decoder neural networks and is closely connected with the DL concept of latent space, a compressed representation of input data that captures its key attributes. Thus, if the model generates faces, the latent space will encode features such as gender, facial expression, and age. First, the encoder compresses input data into a smaller latent space representation. The decoder then takes a sample from the latent space and reconstructs it into novel data resembling the original input. VAEs are powerful tools for generating realistic human faces and other images, text generation, music composition, and treatment and drug discovery.
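The "sample from the latent space" step can be illustrated with the standard VAE formulation, in which the encoder outputs a mean and a log-variance for each latent dimension. The sketch below goes slightly beyond the description above and makes several assumptions: the function names are hypothetical, the encoder outputs are hard-coded instead of coming from a trained network, and the decoder is left out.

```python
import math
import random


def sample_latent(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1) (the reparameterization trick)."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mu, log_var)
    ]


def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over latent dimensions."""
    return 0.5 * sum(
        math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var)
    )


rng = random.Random(0)
mu = [0.8, -0.3]          # assumed encoder output: latent means
log_var = [-1.0, -2.0]    # assumed encoder output: latent log-variances

z = sample_latent(mu, log_var, rng)
print("latent sample:", z)
print("KL penalty:", kl_to_standard_normal(mu, log_var))
```

The KL penalty is typically added to the reconstruction error during training; it keeps the latent space close to a standard normal distribution so that randomly drawn samples decode into plausible outputs.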

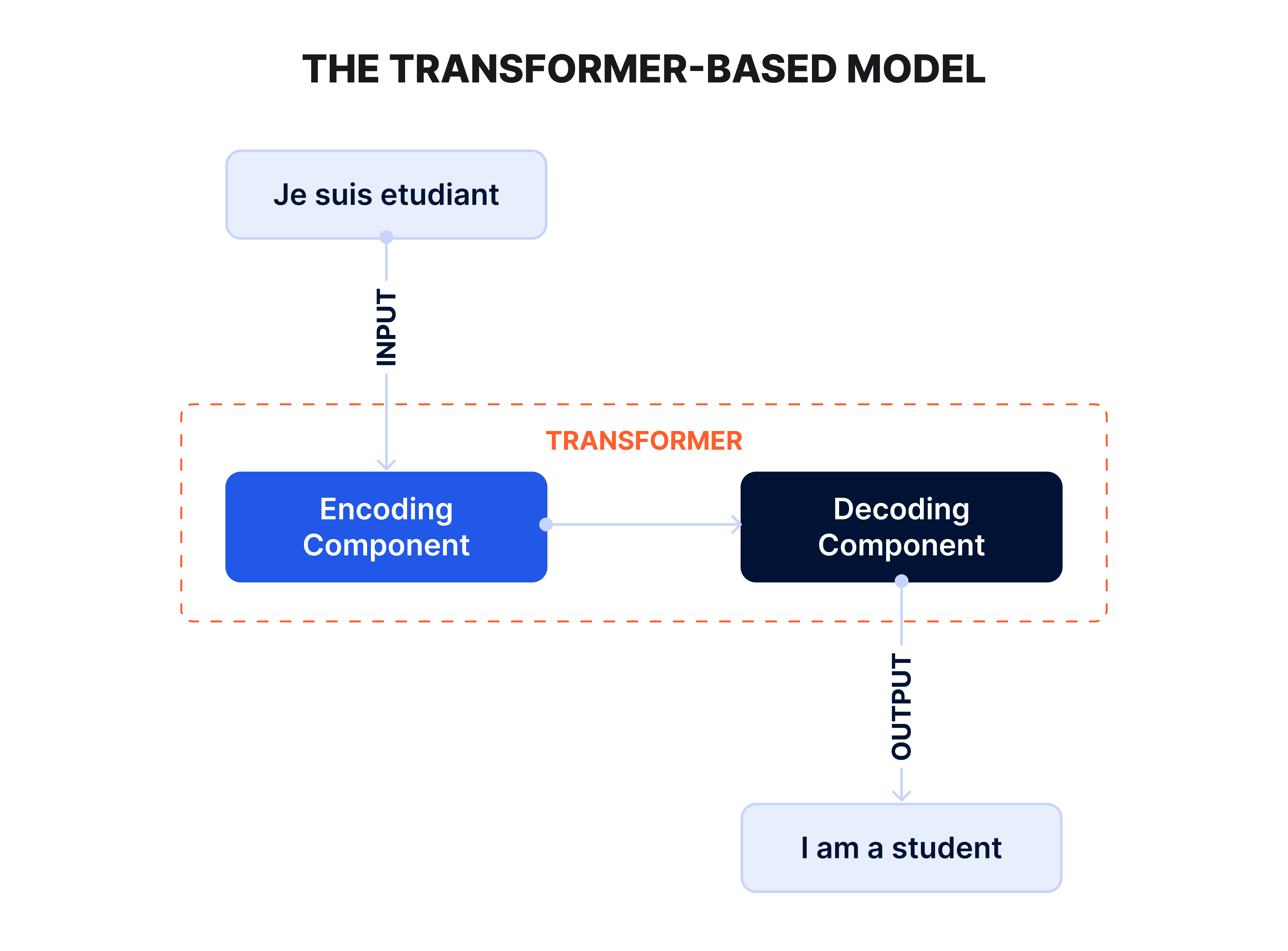

Transformer-based models excel at NLP tasks, such as language translation and question answering. They are built on the transformer architecture, which in its original form comprises two parts: an encoder that processes an input sequence and a decoder that generates an output sequence based on the encodings. Transformers are trained on large amounts of unlabeled data to capture contextual relationships within sequential data. Their compelling advantages include the ability to process all elements of a sequence in parallel rather than one at a time. Transformers understand the structure and context of language by capitalizing on two essential mechanisms: self-attention and positional encodings. Self-attention lets the model weigh how relevant every other word in the input sequence is to a given word, which helps bring meaning to each word. Positional encodings, in turn, represent the position of each word in a sentence, which is crucial for language tasks because transformers don’t have an inherent sense of word order. Transformer-based models have paved the way for the rise of generative pre-trained transformers (GPTs), leveraged in a wide range of text-generation applications.
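Both mechanisms fit in a short plain-Python sketch. It is deliberately simplified: real transformers apply learned projection matrices to produce separate queries, keys, and values, whereas here the same vectors serve as all three. The four-dimensional toy embeddings and the function names are assumptions made for illustration.

```python
import math


def positional_encoding(position, d_model):
    """Sinusoidal encoding: even indices use sine, odd indices use cosine."""
    encoding = []
    for i in range(d_model):
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: each position mixes in all the others."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Toy sequence: the first and third "words" share the same embedding.
tokens = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0]]
inputs = [
    [t + p for t, p in zip(token, positional_encoding(pos, 4))]
    for pos, token in enumerate(tokens)
]
contextual = self_attention(inputs, inputs, inputs)
print(contextual)
```

Because the positional encodings differ, the first and third inputs are no longer identical even though the underlying word is the same, which is how the model recovers word order.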

The GenAI market is growing in leaps and bounds, continuously revolutionizing every aspect of our daily lives and holding immense potential for businesses. The transformation of this awe-inspiring technology from idea into reality would not have been possible without the substantial efforts and investment of several technology giants. Without further ado, let’s explore the major tech providers in the generative AI market.

OpenAI, in alliance with Microsoft, is at the forefront of GenAI and is widely known for its GPT family of LLMs (GPT-1, GPT-2, GPT-3, and GPT-4), the more recent of which power the ChatGPT app. In addition, OpenAI offers developers an API for integrating the models behind ChatGPT into the software products they build. The organization also built the DALL·E, DALL·E 2, and DALL·E 3 image generation models, which instantly garnered public attention by unlocking numerous opportunities to amplify human creativity.

Google is another key player expanding the boundaries of the GenAI field. The company built the PaLM and Gemini LLMs, lying at the core of the Bard conversational AI app. The app specializes in processing and understanding various languages, recognizing all types of inputs, and responding to queries via different modalities.

Amazon Web Services (AWS), in collaboration with Hugging Face, leverages the power of LLMs and CV models to create a plethora of generative AI apps with the capability to perform multiple tasks, from text summarization to image creation and code generation. AWS also provides the Amazon Bedrock services, which help build generative models on top of FMs from prominent AI companies.

A game-changer in the generative AI market, Adobe has crafted its own generative AI technology, known as Adobe Sensei GenAI. Sensei GenAI is integrated with Adobe Experience Platform (AEP) and employs multiple LLMs to empower businesses to accomplish a wide variety of text-based tasks. By leveraging Sensei GenAI, organizations can consolidate all their data and train GenAI models based on proprietary customer insights, thereby refining their marketing efforts, boosting productivity, and delivering personalized customer experiences.

NVIDIA, a prominent player in the GenAI realm, is a key provider of hardware for a wide range of AI solutions. The company is renowned for its graphics processing units (GPUs), which are essential for training and running generative models. In addition, NVIDIA has significantly contributed to the development of various AI tools and generative models, including GANs.

IBM uses its Watsonx.ai platform to train FMs and then leverage them to build various GenAI models. Its generative models can produce synthetic data and are applied in speech analysis, image generation, and the creation of industry-specific text content, including lesson plans, marketing emails, social media posts, etc.

Equipped with the right generative AI tools, companies can seize exciting opportunities to optimize business processes, drive efficiency, and remain competitive in the constantly evolving market. Several technology giants have emerged as defining forces behind the power of generative AI. Below we take a look at the most groundbreaking GenAI tools developed by OpenAI, its partner Microsoft (through its subsidiary GitHub), and their competitor Google, along with their real-life use cases.

Launched in November 2022, ChatGPT is a chatbot app built upon the GPT-3.5 and GPT-4 LLMs, which have been trained on terabytes of data to perform various language processing and text-based tasks. ChatGPT demonstrates exceptional versatility in generating human-like text, providing contextually appropriate answers, and offering valuable support in a multitude of language tasks. Its abilities range from having casual conversations with users and writing different types of copy, such as songs, poems, and stories, to explaining software code and telling jokes. It’s worth noting that the GPT-4 model can also take in images and answer related questions, a capability referred to as GPT-4 with Vision, or GPT-4V. In addition, users of the ChatGPT apps for iOS and Android can interact with the chatbot by voice.

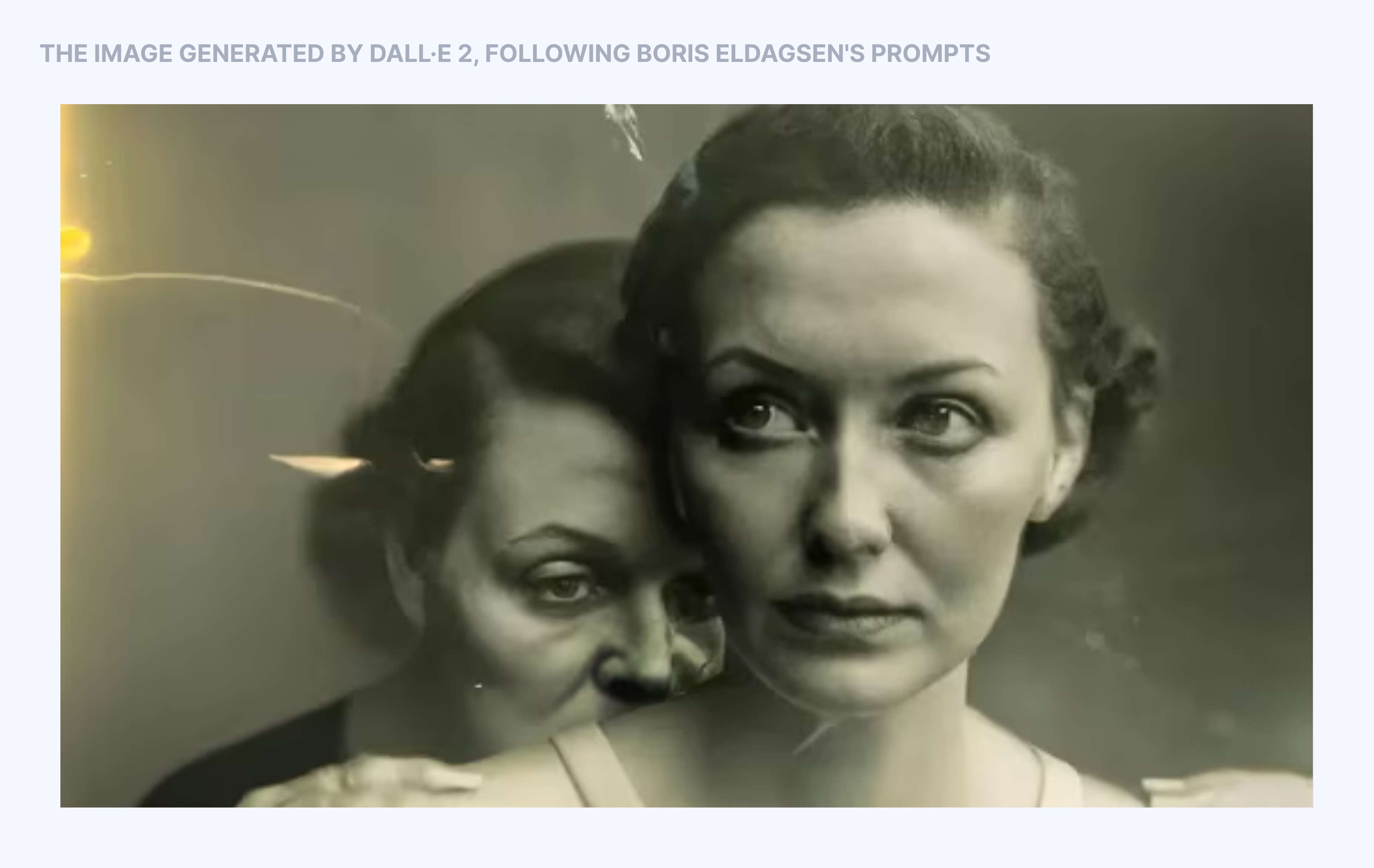

DALL·E is a multimodal AI system that combines language and visual processing to render relevant images in response to text prompts. The original DALL·E is built on a version of GPT-3, while its successors, DALL·E 2 and DALL·E 3, generate images with diffusion models. Together, these tools let users unleash the power of creativity and generate a multitude of images in various styles, including anthropomorphized objects and animals, combinations of unrelated concepts, emoji, and more. The DALL·E tool can empower businesses in any industry and has found a wide range of generative AI applications, including ideating product design, creating custom art, crafting visual aids, and shaping a strong brand identity.

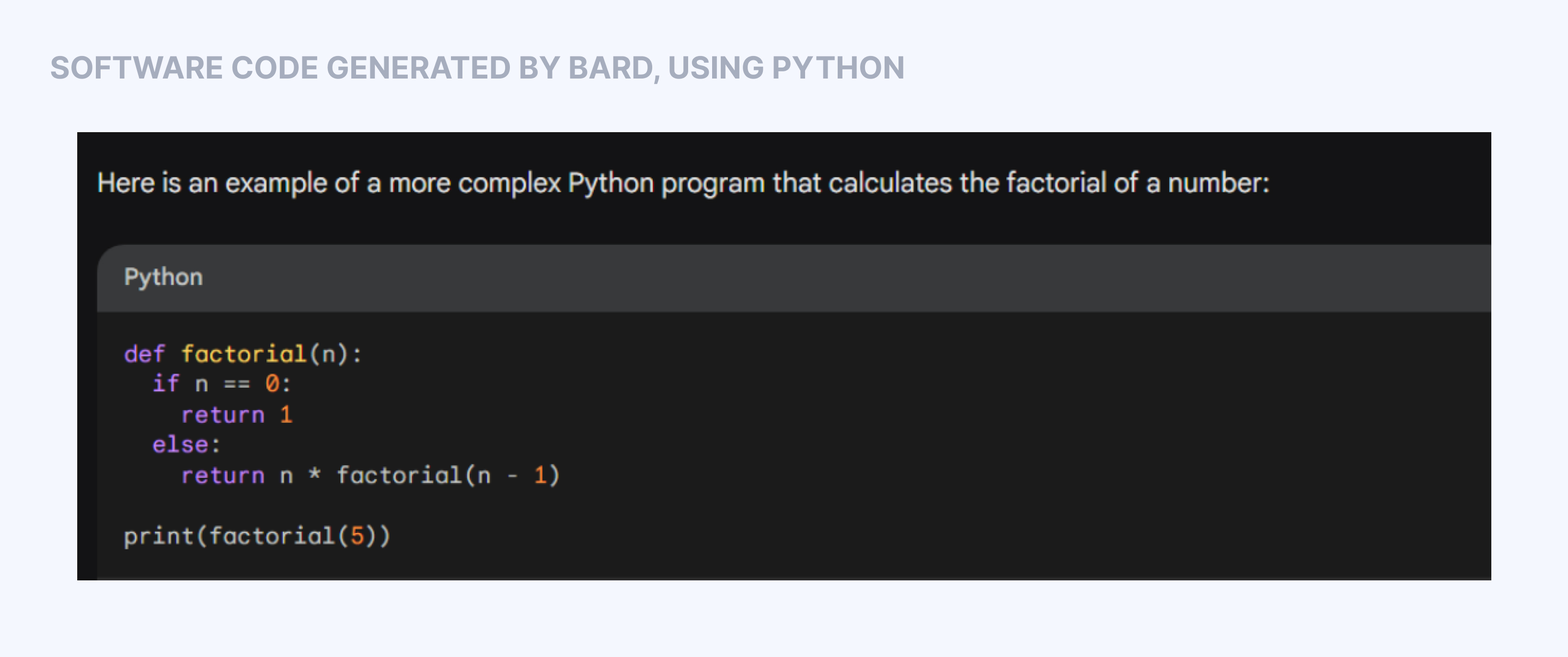

Released in March 2023, Bard is an AI-powered chatbot and content-generation tool built on the Pathways Language Model 2 (PaLM 2) and the Gemini multimodal LLM. In essence, PaLM 2 is a next-gen LLM that has been trained on text in over one hundred languages and demonstrates improved logic and reasoning capabilities, while Gemini focuses on multimodal processing and deep contextual understanding. Standing as Google’s response to the introduction of ChatGPT, Bard supports user interactions via voice, text, and images. The tool can draw on up-to-date information in real time and handle a broad range of tasks, be it answering users’ questions, generating creative text content or computer code, brainstorming ideas, or even planning trips. The Bard chatbot can benefit businesses that want to automate their operations, optimize marketing strategies, conduct market research, and enhance customer experiences.

GitHub Copilot is a cloud-based GenAI tool for software code autocompletion and generation developed by GitHub in partnership with OpenAI. Powered by the OpenAI Codex AI model, this tool analyzes the context of the code developers are writing, suggests relevant completions, and generates code in many programming languages, including Python, JavaScript, Go, Ruby, and more. The capabilities of this powerful instrument also extend to code refactoring, making it easier to improve existing code while keeping the software functionality intact. By incorporating GitHub Copilot in their daily workflow, software engineers can reduce errors, enhance code quality, increase their productivity, and expedite software development.

GenAI is no longer a science fiction concept only encountered in books, but rather an accessible reality and a game-changer in how we produce novel content and solve mundane tasks. It’s possible to find generative AI examples in everything we do—be it generating images, audio, and text or leveraging this technology to elevate customer experiences—and its impact on the entire world, let alone the business sector, is tremendous. If your company wants to stay ahead of the pack and embrace GenAI soon to bring business operations to the next level, partnering up with an experienced and innovative technology company is a strategic move. Get in touch with our expert team and discover the limitless opportunities that GenAI can unlock for your business!
